Latest Articles

Systems AI: The Next Evolution in Artificial Intelligence

Artificial Intelligence (AI) has undergone remarkable transformations over the past few decades, evolving from rule-based systems to the machine learning and deep learning models that dominate today’s applications. However, as AI systems become increasingly complex, a new paradigm is emerging: Systems AI, a holistic approach to building and managing AI models that can operate at scale, adapt to new environments, and evolve autonomously. In this post, we’ll explore what Systems AI is, why it matters, and how it’s shaping the future of intelligent systems.

What is Systems AI?

Systems AI refers to an approach that integrates machine learning, data processing, system architecture, and feedback loops to create self-improving, autonomous systems. Unlike traditional AI, which focuses on developing specific models for specific tasks, Systems AI emphasizes building entire ecosystems that can learn, adapt, and evolve over time with minimal human intervention.

At its core, Systems AI involves:

Automation of AI development: Automating processes like data collection, feature engineering, model selection, and hyperparameter tuning.

Adaptability: Creating systems that can adjust their behavior in response to new data or changing environments.

Self-healing capabilities: Enabling systems to detect and correct their own errors without human intervention.

Collaboration of multiple models: Managing the interaction of different AI models within a complex system to solve multi-faceted problems.

Key Characteristics of Systems AI

1. End-to-End Automation

In traditional AI workflows, significant human effort is required at various stages, such as data preparation, model training, evaluation, and deployment. Systems AI seeks to automate these stages, turning AI into an autonomous system that can handle the entire lifecycle of model development without constant oversight.

2. Continuous Learning and Adaptation

Static AI models must be retrained offline before they can benefit from new data. Systems AI instead relies on continuous learning: models update themselves incrementally, in near real time, as new data arrives, so they remain relevant even as their environments change.

3. Modularity and Scalability

Systems AI emphasizes modular design, where individual AI components can be independently developed, tested, and upgraded. This modularity allows systems to scale by adding or modifying specific components without disrupting the entire system.

4. Multi-Agent Collaboration

Systems AI often involves multiple AI models working together to solve complex problems. For instance, in autonomous driving, different models handle tasks such as object detection, path planning, and decision-making. Systems AI ensures these models communicate effectively and work towards a common goal.

5. Self-Optimization

Systems AI includes mechanisms for self-optimization, where the system continuously monitors its performance and makes adjustments to improve accuracy, efficiency, and robustness.

Applications of Systems AI

1. Autonomous Vehicles

Autonomous driving is one of the most prominent applications of Systems AI. These vehicles require a coordinated system of AI models to handle perception, decision-making, and control. Systems AI ensures that each model can work in harmony, adapt to new road conditions, and improve over time through real-world experience.

2. Smart Cities

Smart cities rely on a network of interconnected systems to manage traffic, energy, public safety, and more. Systems AI can enable these systems to collaborate, learn from real-time data, and adapt to changing urban environments without human intervention.

3. Healthcare

In healthcare, Systems AI can manage multiple models for tasks such as diagnosis, treatment recommendations, and patient monitoring. By integrating these models into a unified system, Systems AI can improve outcomes, reduce errors, and enable personalized medicine at scale.

4. Industrial Automation

Industries such as manufacturing and logistics can benefit from Systems AI by creating adaptive, self-improving systems that optimize production processes, predict maintenance needs, and improve supply chain efficiency.

Challenges in Building Systems AI

While Systems AI offers immense potential, there are significant challenges that must be addressed:

Complexity: Building and managing large-scale, interconnected AI systems is a daunting task. Ensuring that these systems remain stable and reliable is critical.

Data Dependence: Systems AI requires vast amounts of diverse, high-quality data to function effectively. Managing this data and ensuring its integrity is a challenge in itself.

Trust and Transparency: As AI systems become more autonomous, ensuring that they are transparent and trustworthy becomes increasingly important. Systems AI must include mechanisms for explainability and accountability.

Ethical Concerns: Autonomous systems can have far-reaching societal impacts. Ensuring that Systems AI operates ethically and aligns with human values is a major concern.

The Future of Systems AI

The future of AI lies not in isolated models solving specific problems but in intelligent systems that can operate autonomously, learn continuously, and collaborate with other systems to solve complex, real-world challenges. Systems AI represents this future, promising advancements in areas ranging from scientific research to climate change mitigation.

Key trends to watch in Systems AI include:

AI-driven system design: Using AI to design and optimize other AI systems.

General-purpose AI ecosystems: Creating platforms where different AI models can collaborate and adapt to a wide range of tasks.

Integration with IoT: Systems AI will increasingly be integrated with Internet of Things (IoT) devices, enabling smarter homes, industries, and cities.

Conclusion

Systems AI is more than just an evolution of traditional AI; it’s a new way of thinking about intelligent technology. By focusing on end-to-end automation, continuous learning, and adaptability, Systems AI promises to create systems that are not only smarter but also more resilient and capable of solving the complex, interconnected problems of the modern world. As AI continues to evolve, Systems AI stands at the forefront, offering a glimpse into a future where machines don’t just execute tasks: they manage, learn, and grow autonomously.
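The continuous-learning and self-healing ideas described in this post are abstract, so here is a minimal sketch of what they can look like at the smallest possible scale. Everything in it is a hypothetical illustration, not part of any Systems AI framework: `OnlineLinearModel` learns a one-feature linear relationship with online stochastic gradient descent, `DriftMonitor` watches its rolling error, and `run_stream` simulates an environment whose true relationship changes halfway through a data stream. The learning rate, window size, and threshold are arbitrary assumptions.

```python
from collections import deque
from typing import List, Tuple


class OnlineLinearModel:
    """One-feature linear model y ~ w * x + b, updated one sample at a time (online SGD)."""

    def __init__(self, lr: float = 0.1) -> None:
        self.w = 0.0
        self.b = 0.0
        self.lr = lr

    def predict(self, x: float) -> float:
        return self.w * x + self.b

    def update(self, x: float, y: float) -> float:
        """Score the sample first, then take one SGD step on squared error.

        Returns the absolute error made *before* learning from this sample.
        """
        error = self.predict(x) - y
        # Gradients of 0.5 * error**2: d/dw = error * x, d/db = error.
        self.w -= self.lr * error * x
        self.b -= self.lr * error
        return abs(error)


class DriftMonitor:
    """Raises an alarm when the rolling mean absolute error exceeds a threshold."""

    def __init__(self, window: int = 20, threshold: float = 0.5) -> None:
        self.errors: deque = deque(maxlen=window)
        self.threshold = threshold

    def observe(self, error: float) -> bool:
        self.errors.append(error)
        window_full = len(self.errors) == self.errors.maxlen
        return window_full and sum(self.errors) / len(self.errors) > self.threshold


def run_stream(n_steps: int = 1000, change_at: int = 500) -> Tuple[OnlineLinearModel, List[int]]:
    model = OnlineLinearModel(lr=0.1)
    monitor = DriftMonitor(window=20, threshold=0.5)
    alarms: List[int] = []
    for step in range(n_steps):
        x = (step % 20) / 10  # deterministic inputs 0.0, 0.1, ..., 1.9
        # Simulated environment change: the true relationship flips at change_at.
        y = 2 * x if step < change_at else -2 * x + 5
        error = model.update(x, y)  # keep learning from every sample
        if monitor.observe(error):
            alarms.append(step)
            # Hypothetical "self-healing" hook: a real system might retrain, roll back
            # a model version, or alert an operator. Here we only restart the window.
            monitor.errors.clear()
    return model, alarms


model, alarms = run_stream()
print(round(model.w, 2), round(model.b, 2), alarms)
```

With these defaults the monitor fires shortly after the simulated change at step 500, and because the model never stops learning it ends close to the new relationship (w near -2, b near 5). A production system would replace the placeholder hook with real retraining, rollback, or alerting logic.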

Aalok Narayan

1/12/2025

Bell Labs: The Cradle of Modern Technology

Few institutions have shaped the world of technology and communication as profoundly as **Bell Labs**. Officially known as **Bell Telephone Laboratories**, it has been at the forefront of scientific discovery and technological innovation for a century. From inventing the **transistor** to developing the foundations of modern computing and communication, Bell Labs has played a pivotal role in defining the information age. In this article, we’ll explore the history, groundbreaking inventions, and lasting legacy of Bell Labs.

---

### **A Brief History**

Bell Labs was established in **1925** as the research and development arm of **AT&T** and **Western Electric**, taking over the research work of Western Electric’s engineering department. Its roots are often traced further back to Alexander Graham Bell’s Volta Laboratory of the 1880s. Over time, Bell Labs became known for its focus on **fundamental research**, resulting in numerous breakthroughs across fields including physics, materials science, and computer science.

Key milestones in Bell Labs’ history include:

- **1947**: The invention of the **transistor** by John Bardeen, Walter Brattain, and William Shockley, which revolutionized electronics and earned them the **1956 Nobel Prize in Physics**.
- **1962**: Launch of **Telstar**, one of the first active communication satellites and the first to relay live television across the Atlantic.
- **1980s**: Development of digital cellular technology and modern optical fiber communication.

---

### **Groundbreaking Inventions**

#### **1. The Transistor (1947)**

Arguably the most significant invention of the 20th century, the transistor replaced bulky vacuum tubes in electronic devices, enabling smaller, faster, and more reliable electronic systems. Without the transistor, modern computers, smartphones, and virtually all electronic devices would not exist.

#### **2. Information Theory (1948)**

Claude Shannon, a researcher at Bell Labs, laid the foundation of modern digital communication with his groundbreaking paper **“A Mathematical Theory of Communication”**. His work popularized the **bit** (a word he credited to his Bell Labs colleague John Tukey) as the unit of information and established the fundamental limits on how compactly information can be encoded and how reliably it can be transmitted over a noisy channel. These results paved the way for data compression, error correction, and the internet.

#### **3. UNIX Operating System (1969)**

Bell Labs researchers, led by **Ken Thompson** and **Dennis Ritchie**, developed **UNIX**, a pioneering operating system known for its simplicity, portability, and powerful features. UNIX and its design ideas underpin many modern operating systems: **macOS** draws on BSD Unix, while **Linux** (and therefore **Android**, which runs on the Linux kernel) is a Unix-like system written from scratch.

#### **4. The C Programming Language (1972)**

Developed by **Dennis Ritchie**, the **C programming language** was designed alongside UNIX and became one of the most widely used programming languages. It influenced countless other languages, including C++, Java, and Python, and remains a cornerstone of software development.

#### **5. Fiber Optics and Communication Technology**

Bell Labs played a key role in developing **optical fiber communication**, which revolutionized telecommunications by enabling high-speed, long-distance data transmission.

#### **6. Digital Signal Processing**

Bell Labs pioneered **digital signal processing (DSP)**, which is fundamental to modern audio, video, and communication technologies. DSP techniques are used in applications ranging from mobile phones to digital media players.

---

### **Nobel Prizes and Recognitions**

Bell Labs’ commitment to research excellence has resulted in **nine Nobel Prizes**, awarded for achievements in physics, chemistry, and economics. Notable laureates include:

- **John Bardeen, Walter Brattain, and William Shockley (1956)** for the invention of the transistor.
- **Arno Penzias and Robert Wilson (1978)** for the discovery of the **cosmic microwave background radiation**, providing strong evidence for the Big Bang theory.
- **Steven Chu (1997)** for developing methods to cool and trap atoms using laser light.

---

### **Impact on Modern Technology**

The innovations developed at Bell Labs have had a lasting impact on modern technology and society:

1. **Computing and Software**: The creation of UNIX and the C programming language laid the foundation for the modern software industry.
2. **Telecommunications**: Advances in digital telephony, fiber optics, and satellite communications enabled the global connectivity we take for granted today.
3. **Semiconductor Industry**: The invention of the transistor gave birth to the semiconductor industry, which powers everything from microprocessors to memory chips.
4. **Scientific Research**: Bell Labs’ work in fundamental science, such as Shannon’s information theory and the discovery of the cosmic microwave background radiation, has influenced numerous fields, from cryptography to cosmology.

---

### **The Modern Bell Labs**

In **2006**, when Lucent merged with Alcatel, Bell Labs became part of **Alcatel-Lucent**, and in **2016** it passed to **Nokia** as part of Nokia’s acquisition of Alcatel-Lucent. Today, **Nokia Bell Labs** continues to conduct cutting-edge research in areas such as 5G, artificial intelligence, and quantum computing. While its focus has shifted from pure research to more applied innovation, Bell Labs remains a hub of technological advancement. Recent projects include:

- **6G research**: Bell Labs is leading efforts to define and develop the next generation of wireless communication.
- **AI-driven networks**: Bell Labs is working on AI-based solutions for more efficient network management and automation.

---

### **Conclusion**

Bell Labs’ contributions to science and technology are unparalleled. From inventing the transistor to developing the UNIX operating system, its innovations have shaped the modern world in profound ways. It stands as a testament to the power of scientific curiosity, interdisciplinary collaboration, and long-term investment in research. As Bell Labs continues to push the boundaries of what’s possible, its legacy lives on in every smartphone, computer, and digital communication system we use today.

---
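Shannon’s idea of measuring information in bits can be made concrete with his entropy formula, H = Σ p · log2(1/p), which gives the minimum average number of bits per symbol that lossless coding can achieve for a memoryless source emitting symbols with probabilities p. The sketch below is a minimal illustration that estimates those probabilities from the character frequencies of a single string; the function name `entropy_bits` is our own, and treating characters as independent is a simplifying assumption.

```python
import math
from collections import Counter


def entropy_bits(message: str) -> float:
    """Shannon entropy of the message's symbol frequencies, in bits per symbol.

    Treats each character as an independent draw from the observed frequencies,
    a simplification: real sources such as English text have dependencies between symbols.
    """
    if not message:
        return 0.0
    counts = Counter(message)
    n = len(message)
    # H = sum over symbols of p * log2(1 / p), with p = count / n.
    return sum((c / n) * math.log2(n / c) for c in counts.values())


for msg in ["aaaa", "abab", "abcd"]:
    print(msg, entropy_bits(msg))
# aaaa 0.0
# abab 1.0
# abcd 2.0
```

A string that repeats one symbol carries no information per symbol, two equally likely symbols need one bit each, and four equally likely symbols need two bits each.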

Aalok Narayan

1/12/2025

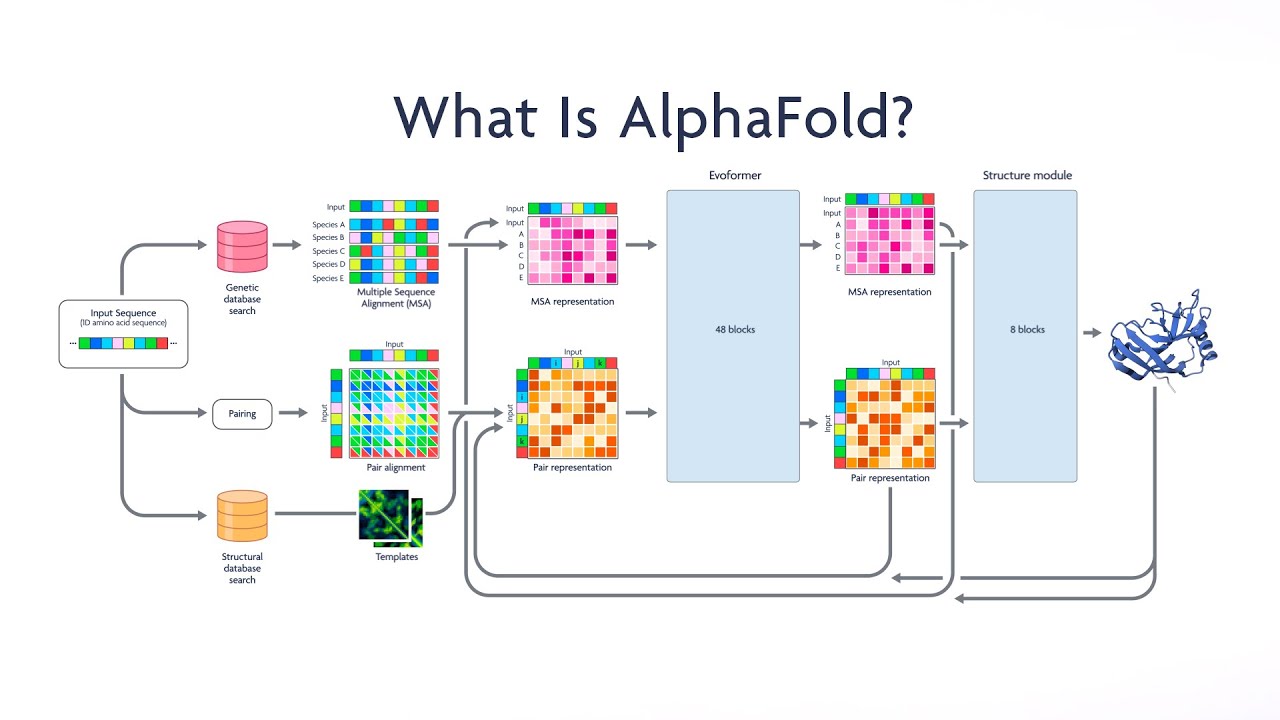

Google AlphaFold: Revolutionizing Biology with AI

In recent years, artificial intelligence (AI) has transformed numerous fields, from language processing to autonomous driving. However, one of the most groundbreaking AI achievements came from **DeepMind**, Google’s AI research arm, with the creation of **AlphaFold**, an AI system that can accurately predict protein structures. The significance of AlphaFold is hard to overstate: it is widely regarded as having largely solved a 50-year-old grand challenge in biology, and it opens new avenues in medicine, drug discovery, and biotechnology.

---

### **The Protein Folding Problem**

Proteins are the building blocks of life. They are composed of long chains of amino acids that fold into complex three-dimensional structures, which determine their function. Understanding the 3D structure of a protein is crucial for:

- **Developing new drugs**: Many diseases are caused by malfunctioning proteins. Knowing a protein’s structure helps design targeted treatments.
- **Biotechnology**: Engineers can design synthetic proteins with specific functions for industrial, medical, and agricultural applications.
- **Understanding life at the molecular level**: Structural biology provides insights into fundamental biological processes.

However, predicting how a protein folds from its amino acid sequence has been a notoriously difficult problem. Experimental methods like X-ray crystallography and cryo-electron microscopy are expensive and time-consuming, often taking months or even years to determine a single protein structure.

---

### **How AlphaFold Works**

AlphaFold approaches the protein folding problem using **deep learning**. Its architecture incorporates key ideas from both structural biology and machine learning, allowing it to predict the 3D structure of a protein starting from its amino acid sequence.

#### **Key Components of AlphaFold**

1. **Input Data**: AlphaFold takes an amino acid sequence as input. To enhance its predictions, it searches sequence databases to build multiple sequence alignments (MSAs) of related proteins and uses templates from known protein structures.
2. **Evoformer Block**: A core component of AlphaFold is the **Evoformer**, a deep neural network that jointly processes the MSA and a pairwise representation of the sequence to infer relationships between different parts of the protein. It captures patterns and evolutionary signals, such as residues that tend to change together, from the data.
3. **Structure Module**: After processing by the Evoformer, the information is passed to the **Structure Module**, which predicts the 3D coordinates of the protein’s atoms. The network then iteratively refines its prediction (a process DeepMind calls “recycling”) to produce highly accurate structures.
4. **Training**: AlphaFold was trained on experimentally determined structures from the Protein Data Bank using supervised learning, supplemented by self-distillation: its own high-confidence predictions for sequences without known structures were added back as extra training data, helping it generalize to unseen proteins.

---

### **AlphaFold’s Performance**

AlphaFold stunned the scientific community in **2020**, when it competed in **CASP14**, the 14th round of the **Critical Assessment of protein Structure Prediction**, a biennial competition that tests the ability of computational methods to predict protein structures. AlphaFold achieved a median Global Distance Test (GDT) score of **92.4** across all targets, on a 0–100 scale where a score of around **90** is informally considered competitive with experimental methods.

---

### **Impact of AlphaFold**

AlphaFold’s success has far-reaching implications:

#### **1. Accelerating Drug Discovery**

With accurate protein structures available, researchers can design drugs more efficiently by targeting specific proteins associated with diseases. For example, AlphaFold could play a crucial role in developing treatments for cancers, Alzheimer’s, and infectious diseases.

#### **2. Advancing Genomics**

The human genome encodes roughly 20,000 proteins, many of which had no experimentally determined structure. AlphaFold’s predictions now provide structural models for nearly all of them, greatly enhancing our understanding of human biology.

#### **3. Synthetic Biology and Biotechnology**

AlphaFold helps scientists design new proteins with tailored functions, which could be used in applications such as creating biodegradable materials, engineering enzymes for industrial processes, or developing biosensors.

#### **4. Solving Mysteries in Basic Science**

AlphaFold can help answer fundamental questions in biology, such as how specific proteins interact and function in complex biological systems.

---

### **AlphaFold Database**

In **July 2021**, DeepMind, in collaboration with the **European Molecular Biology Laboratory’s European Bioinformatics Institute (EMBL-EBI)**, launched the **AlphaFold Protein Structure Database**. In 2022 it was expanded to provide open access to predicted structures of over **200 million proteins** from a wide range of organisms, and it has become an invaluable resource for scientists worldwide.

---

### **Challenges and Future Directions**

Despite its remarkable achievements, AlphaFold is not without limitations:

1. **Protein Complexes**: While AlphaFold excels at predicting individual protein structures, predicting the structures of protein complexes (how proteins interact with each other) remains a challenge.
2. **Dynamic Behavior**: Proteins are not static; they often change shape to perform their functions. AlphaFold predicts static structures, so capturing dynamic behavior is an area for future improvement.
3. **Accuracy for Low-Confidence Predictions**: AlphaFold reports a per-residue confidence score (pLDDT) with each prediction. Although it achieves high accuracy for many proteins, regions with low confidence scores require further validation through experimental methods.

#### **Future Directions**

- Improving AlphaFold’s ability to predict protein-protein interactions.
- Enhancing its predictions for membrane proteins, which are critical drug targets.
- Extending its capabilities to RNA and other biomolecules.

---

### **Conclusion**

AlphaFold represents a monumental leap forward in the application of AI to scientific discovery. By largely solving the decades-old protein structure prediction problem, it has transformed the fields of molecular biology, drug discovery, and biotechnology. As researchers continue to build on AlphaFold’s achievements, we can expect a wave of new scientific insights and innovations that will have a lasting impact on medicine and technology. DeepMind’s AlphaFold is a shining example of how AI can be harnessed to tackle the world’s most complex challenges, unlocking possibilities that were once beyond reach.

---
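To make the GDT score quoted above less abstract, here is a simplified sketch of GDT_TS, the variant used in CASP: the percentage of residues whose predicted Cα atom lies within 1, 2, 4, and 8 Å of the experimental position, averaged over those four cutoffs. The sketch assumes the two structures have already been optimally superimposed; the official scoring program (LGA) also searches over superpositions for each cutoff, which is omitted here. The function name `gdt_ts` and the toy coordinates are illustrative.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def gdt_ts(predicted: Sequence[Point], reference: Sequence[Point]) -> float:
    """Simplified GDT_TS on a 0-100 scale.

    Assumes `predicted` and `reference` hold matching C-alpha coordinates (in angstroms)
    that have ALREADY been superimposed; no superposition search is performed.
    """
    if not predicted or len(predicted) != len(reference):
        raise ValueError("need two non-empty coordinate lists of equal length")
    distances = [math.dist(p, r) for p, r in zip(predicted, reference)]
    cutoffs = (1.0, 2.0, 4.0, 8.0)  # distance thresholds used by GDT_TS
    # Fraction of residues within each cutoff, then averaged over the four cutoffs.
    fractions = [sum(d <= c for d in distances) / len(distances) for c in cutoffs]
    return 100.0 * sum(fractions) / len(cutoffs)


reference = [(0.0, 0.0, 0.0), (4.0, 0.0, 0.0), (8.0, 0.0, 0.0), (12.0, 0.0, 0.0)]
# Toy prediction with per-residue errors of 0.5, 1.5, 3.0 and 10.0 angstroms.
predicted = [(0.5, 0.0, 0.0), (4.0, 1.5, 0.0), (8.0, 0.0, 3.0), (22.0, 0.0, 0.0)]
print(gdt_ts(predicted, reference))  # 56.25
print(gdt_ts(reference, reference))  # 100.0
```

In the toy example one residue is within 1 Å, two within 2 Å, and three within 4 Å and 8 Å, so the score is (25 + 50 + 75 + 75) / 4 = 56.25. A median of 92.4 therefore means that, for a typical target, almost every residue was placed within a few angstroms of its experimental position.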

Aalok Narayan

1/12/2025

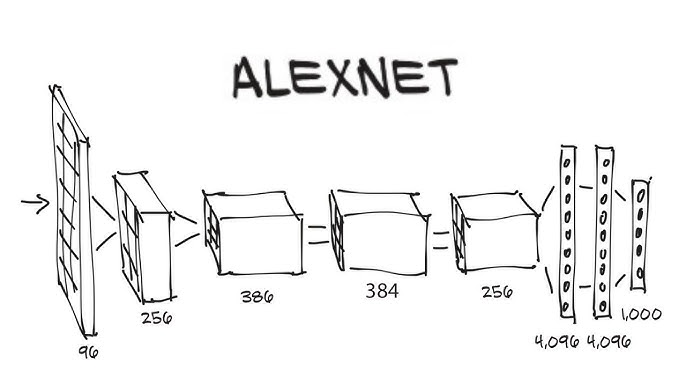

AlexNet: Pioneering the Deep Learning Revolution

In the world of deep learning, few models have had as significant an impact as **AlexNet**, the neural network that sparked a revolution in computer vision and inspired the rapid development of deep learning technologies. Introduced by **Alex Krizhevsky**, **Ilya Sutskever**, and **Geoffrey Hinton** in **2012**, AlexNet was the model that convincingly demonstrated the effectiveness of deep convolutional neural networks (CNNs) on large-scale image classification. This post explores the architecture, key innovations, impact, and legacy of AlexNet.

---

## **The Breakthrough: ImageNet Challenge 2012**

The turning point for AlexNet came during the **ImageNet Large Scale Visual Recognition Challenge (ILSVRC) 2012**. The goal of this competition was to classify images from a subset of the **ImageNet dataset** containing about **1.2 million training images** across **1,000 categories**. Before AlexNet, approaches built on hand-crafted feature extractors dominated the field, with top-5 error rates around **25%** (the best competing entry in 2012 scored about **26.2%**). AlexNet shattered expectations with a top-5 error rate of just **15.3%**, a monumental improvement that brought CNNs into the spotlight.

---

## **AlexNet Architecture**

AlexNet’s architecture builds upon the fundamental ideas of earlier CNNs, such as **LeNet-5** by Yann LeCun, but scales them up to handle large datasets and complex images.

### **Key Components of AlexNet**

1. **Input Layer**: The input to AlexNet is an RGB image of size **227x227x3** (width, height, and color channels). The original paper states 224x224, but 227x227 is the size that makes the first layer’s output dimensions work out.
2. **Convolutional Layers**: AlexNet consists of **five convolutional layers**, each followed by a non-linear activation function (ReLU). Convolutional layers apply learned filters to their input to detect features such as edges, textures, and complex shapes.
   - Layer 1: 96 filters of size **11x11**, stride 4, output **55x55x96**.
   - Layer 2: 256 filters of size **5x5**, applied to the max-pooled output of layer 1, output **27x27x256**.
   - Layers 3–5: Smaller **3x3** filters (384, 384, and 256 of them) for deeper feature extraction.
3. **ReLU Activation**: Instead of saturating activation functions like sigmoid or tanh, AlexNet uses **Rectified Linear Units (ReLU)**, f(x) = max(0, x). Because ReLUs do not saturate for positive inputs, they mitigate vanishing gradients and let the network converge much faster.
4. **Max Pooling Layers**: After the first, second, and fifth convolutional layers, overlapping **max pooling** (3x3 windows with stride 2) reduces the spatial dimensions of the feature maps by keeping the strongest activation in each window. This lowers the computational load and, according to the authors, slightly reduces overfitting.
5. **Fully Connected Layers**: After the convolutional and pooling layers, AlexNet includes **three fully connected layers** (4,096, 4,096, and 1,000 units), with a softmax over the final layer producing probabilities for each of the **1,000 classes**.
6. **Dropout Regularization**: AlexNet applies **dropout** (with a probability of 0.5) in the first two fully connected layers to prevent overfitting. Dropout randomly deactivates neurons during training, making the network more robust.

---

## **Key Innovations in AlexNet**

1. **GPU Acceleration**: One of the major reasons AlexNet succeeded at this scale was its use of **GPU acceleration** for training. The network was split across **two NVIDIA GTX 580 GPUs** (3 GB each), which made training on ImageNet feasible in about five to six days.
2. **ReLU Activation**: Using **ReLU** as the activation function was a crucial choice. In the paper, a four-layer CNN with ReLUs reached a 25% training error rate on CIFAR-10 six times faster than an equivalent network with tanh units.
3. **Data Augmentation**: To improve generalization and prevent overfitting, AlexNet used **data augmentation** techniques such as random cropping, horizontal flipping, and altering the intensities of the RGB channels.
4. **Dropout**: Dropout was a then-new regularization technique. By randomly dropping neurons during training, it helped AlexNet generalize better and avoid overfitting despite its roughly 60 million parameters.

---

## **Impact of AlexNet**

AlexNet’s success in the ImageNet challenge was a turning point for deep learning. It demonstrated that:

- **Deep learning models can outperform traditional machine learning methods** when applied to large datasets.
- **GPU acceleration is essential** for training large neural networks efficiently.
- **Data augmentation and dropout** can significantly improve model generalization and performance.

Following AlexNet’s victory, deep learning became the dominant paradigm in computer vision, leading to rapid advancements in image recognition, object detection, and segmentation.

---

## **Legacy and Further Developments**

AlexNet paved the way for more sophisticated deep learning models, including:

- **VGGNet (2014)**: A deeper network with smaller filters.
- **GoogLeNet (2014)**: Introduced inception modules for computational efficiency.
- **ResNet (2015)**: Used residual (skip) connections to make networks with more than 100 layers trainable, overcoming the degradation that plagued very deep plain networks.

Today’s models, including transformers and Vision Transformers (ViTs), build on the deep learning momentum that AlexNet and its architects set in motion.

---

## **Conclusion**

AlexNet was more than just a neural network; it was a breakthrough that changed the trajectory of AI and machine learning. By combining innovations in architecture, training techniques, and hardware acceleration, AlexNet demonstrated the true potential of deep learning. Its influence is still felt today, as modern neural networks continue to evolve and push the boundaries of what’s possible in artificial intelligence.

---
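The layer dimensions listed in the architecture section follow from the standard output-size formula for a convolution or pooling window, floor((W − K + 2P) / S) + 1, where W is the input width, K the kernel size, P the padding, and S the stride. The sketch below traces a 227x227x3 input through AlexNet’s convolutional and pooling stages. The padding values for conv2 to conv5 are an assumption taken from common reimplementations, since the paper does not list them explicitly; the names `ALEXNET_FEATURES` and `trace_shapes` are illustrative.

```python
from typing import List, Optional, Tuple

# (name, kernel, stride, padding, out_channels); None means the layer keeps its channel count.
# Padding for conv2-conv5 follows common reimplementations (an assumption, not from the paper).
ALEXNET_FEATURES: List[Tuple[str, int, int, int, Optional[int]]] = [
    ("conv1", 11, 4, 0, 96),
    ("pool1", 3, 2, 0, None),
    ("conv2", 5, 1, 2, 256),
    ("pool2", 3, 2, 0, None),
    ("conv3", 3, 1, 1, 384),
    ("conv4", 3, 1, 1, 384),
    ("conv5", 3, 1, 1, 256),
    ("pool5", 3, 2, 0, None),
]


def conv_output_size(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Spatial output size of a conv or pooling window: floor((W - K + 2P) / S) + 1."""
    return (size - kernel + 2 * padding) // stride + 1


def trace_shapes(input_size: int = 227, in_channels: int = 3) -> List[Tuple[str, int, int, int]]:
    """Return (layer name, height, width, channels) after each stage."""
    size, channels = input_size, in_channels
    shapes = []
    for name, kernel, stride, padding, out_channels in ALEXNET_FEATURES:
        size = conv_output_size(size, kernel, stride, padding)
        if out_channels is not None:
            channels = out_channels
        shapes.append((name, size, size, channels))
    return shapes


for name, h, w, c in trace_shapes():
    print(f"{name}: {h}x{w}x{c}")
_, h, w, c = trace_shapes()[-1]
print("flattened input to first FC layer:", h * w * c)  # 9216
```

The trace reproduces the 55x55x96 and 27x27x256 shapes from the article and ends at 6x6x256, which is flattened into 9,216 values for the first 4,096-unit fully connected layer. Running `conv_output_size(224, 11, 4)` returns 54 rather than 55, which is why 227x227 is usually used in practice.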

Aalok Narayan

1/12/2025

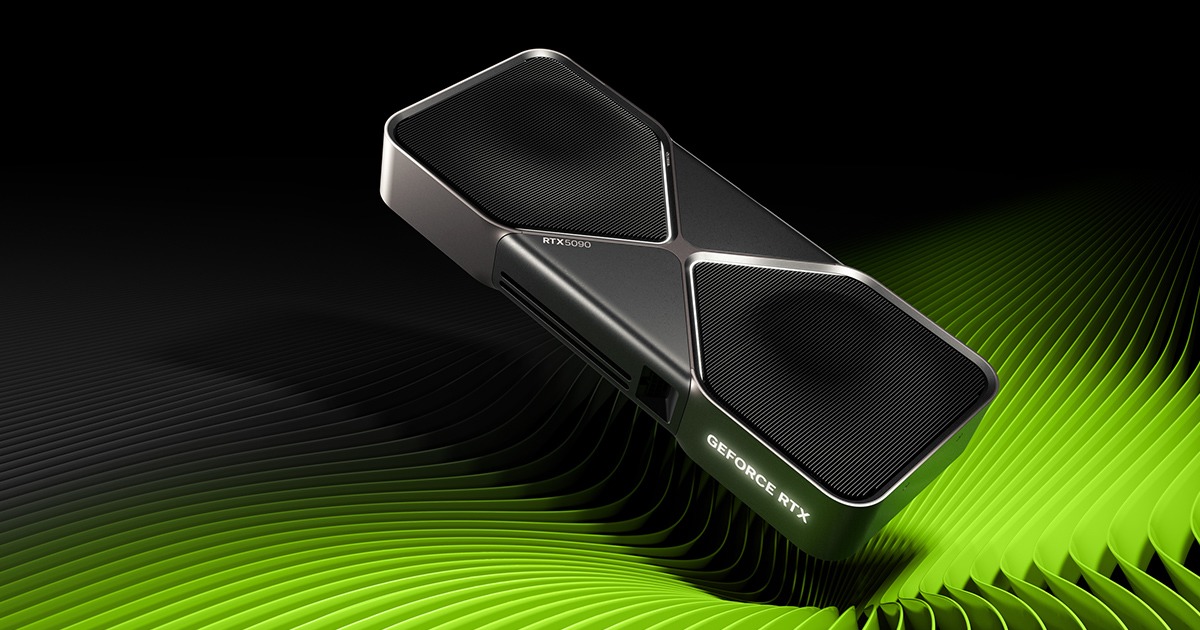

NVIDIA 50 Series GPUs: A New Era of Graphics Performance

NVIDIA has once again raised the bar with its 50 Series GPUs, announced at CES in January 2025. Known for consistently pushing the limits of graphics technology, NVIDIA’s new series introduces a host of innovations aimed at gaming, creative workloads, and AI-driven applications. With a new architecture, faster memory, and expanded AI capabilities, the 50 Series is already generating massive excitement in the tech world.

## **Key Innovations in the 50 Series**

### 1. **Next-Gen Architecture: Blackwell**

At the heart of the NVIDIA 50 Series GPUs is the **Blackwell** architecture, which succeeds the Ada Lovelace architecture used in the 40 Series. Blackwell brings improvements in computational power and ray-tracing performance, along with fifth-generation Tensor Cores that let developers harness real-time machine learning and neural rendering.

- **Fourth-Generation RT Cores**: The 50 Series introduces new RT cores, aiming for more realistic lighting, shadows, and reflections with improved hardware acceleration.
- **DLSS 4**: NVIDIA’s Deep Learning Super Sampling (DLSS) technology has reached its fourth iteration. Its headline feature, Multi Frame Generation, generates up to three additional frames for every rendered frame, boosting frame rates in graphically demanding games.

### 2. **More VRAM, Faster GDDR7 Memory**

The flagship RTX 5090 comes with **32GB of GDDR7 VRAM**, up from 24GB on the RTX 4090, which helps with 8K gaming and high-resolution 3D rendering. Across the lineup, the move to GDDR7 brings significantly higher memory bandwidth, helping the GPUs handle massive datasets and textures.

### 3. **Unprecedented AI Performance**

NVIDIA has equipped the 50 Series with its most advanced Tensor Cores to date, including support for the compact FP4 data format. These cores enhance everything from real-time rendering in games to AI-driven content creation. With the growing demand for AI in video editing, 3D modeling, and virtual production, the 50 Series promises to be a game-changer for professionals in creative fields.

### 4. **Manufacturing Process and Power**

The 50 Series is built on a TSMC 4nm-class process, the same node family used for the 40 Series, rather than a 3nm node. Without a major die shrink, efficiency improvements come mainly from the new architecture, and power draw has risen at the top end: the RTX 5090 is rated at **575W** of total graphics power, versus 450W for the RTX 4090. For mobile users, NVIDIA has also announced RTX 50 Series laptop GPUs.

## **Models and Specs**

Here’s a quick breakdown of the initial models in the NVIDIA 50 Series lineup, based on NVIDIA’s launch specifications:

| **Model** | **VRAM** | **CUDA Cores** | **Boost Clock** | **Total Graphics Power** |
|-------------|------------|--------|----------|------|
| RTX 5090    | 32GB GDDR7 | 21,760 | 2.41 GHz | 575W |
| RTX 5080    | 16GB GDDR7 | 10,752 | 2.62 GHz | 360W |
| RTX 5070 Ti | 16GB GDDR7 | 8,960  | 2.45 GHz | 300W |
| RTX 5070    | 12GB GDDR7 | 6,144  | 2.51 GHz | 250W |

No RTX 5090 Ti or Titan model was part of the launch lineup; any such variant remains a matter of rumor.

## **Performance Benchmarks**

At launch, NVIDIA claimed the RTX 5090 delivers up to twice the performance of the RTX 4090, but that figure relies on DLSS 4 Multi Frame Generation. Gains in traditional rendering without frame generation are expected to be considerably smaller, so independent benchmarks are the best guide. The new Tensor Cores should also speed up productivity tasks such as video rendering, machine learning, and real-time rendering for virtual reality applications.

## **Cooling and Thermal Solutions**

The 50 Series Founders Edition cards use a redesigned double flow-through cooler, which lets the RTX 5090 Founders Edition fit in a two-slot design despite its higher power rating. Custom water blocks for the 5090 and 5080 models are also being developed by leading manufacturers for extreme overclockers.

## **Gaming Experience Redefined**

For gamers, the NVIDIA 50 Series promises smoother gameplay, even with ray tracing maxed out. Ray-traced AAA titles like *Cyberpunk 2077* and *Starfield* stand to benefit from the new RT cores, while DLSS 4 Multi Frame Generation targets high frame rates at 4K resolution. With DisplayPort 2.1b output, the 50 Series is also ready to drive next-generation high-refresh-rate monitors and TVs. VR enthusiasts can likewise expect reduced latency and higher frame rates, making the 50 Series an attractive choice for immersive VR gaming.

## **The Future of AI-Powered Creativity**

Beyond gaming, NVIDIA’s 50 Series GPUs are set to accelerate AI research and creative workflows. Content creators can look forward to faster video editing, real-time photorealistic rendering, and seamless motion graphics design. Applications like Blender, Adobe Premiere Pro, and DaVinci Resolve are already being optimized for the 50 Series, promising improved productivity across the board. With the increasing use of AI tools in content creation, the new Tensor Cores should prove valuable for tasks such as AI-driven upscaling and neural texture generation.

## **Pricing and Availability**

NVIDIA announced launch prices ranging from **$549 for the RTX 5070** to **$1,999 for the RTX 5090**, with the RTX 5080 at $999 and the RTX 5070 Ti at $749. The RTX 5090 and RTX 5080 go on sale on **January 30, 2025**, with the RTX 5070 Ti and RTX 5070 to follow. While the flagship is priced at a premium, the performance leap and technological advancements may justify the investment for gamers, professionals, and AI enthusiasts alike.

---

## **Conclusion: Worth the Upgrade?**

The NVIDIA 50 Series GPUs represent a significant leap forward in graphics performance and AI capability. Whether you’re a gamer looking for ultra-high frame rates, a content creator seeking faster render times, or an AI developer needing powerful computational performance, the 50 Series aims to deliver on all fronts. With innovations in ray tracing, AI, and memory, NVIDIA is not just setting a new standard for GPUs; it’s shaping the future of computing.
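Memory bandwidth, mentioned above as one of the 50 Series’ improvements, is simple to estimate. Theoretical peak bandwidth is the memory bus width in bits times the per-pin data rate in Gbit/s, divided by 8 to convert bits to bytes. The sketch below applies that formula; the bus widths and data rates fed into it are commonly reported figures, treated here as assumptions to check against NVIDIA’s official spec sheets, and the function name is illustrative.

```python
def peak_bandwidth_gb_per_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Theoretical peak memory bandwidth in GB/s.

    bus_width_bits: width of the memory interface in bits.
    data_rate_gbps: effective data rate per pin in Gbit/s.
    Dividing by 8 converts bits to bytes.
    """
    return bus_width_bits * data_rate_gbps / 8


# Assumed inputs (commonly reported figures); verify against official specifications.
cards = {
    "RTX 4090 (GDDR6X)": (384, 21.0),
    "RTX 5090 (GDDR7)": (512, 28.0),
}
for name, (bus, rate) in cards.items():
    print(f"{name}: {peak_bandwidth_gb_per_s(bus, rate):.0f} GB/s")
# RTX 4090 (GDDR6X): 1008 GB/s
# RTX 5090 (GDDR7): 1792 GB/s
```

Under these inputs the wider bus and faster GDDR7 together give the flagship roughly 78% more peak bandwidth. Real-world throughput is lower than this theoretical ceiling and depends on the workload.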

Aalok Narayan

1/12/2025

Why You Shouldn’t Worry About “The Age” of AI

When people talk about artificial intelligence, they often throw around phrases like “We’re in the early days of AI” or “AI has reached its golden age.” Sounds profound, doesn’t it? But here’s the thing: obsessing over which “age” of AI we’re in is kind of pointless. It’s like deciding whether to buy an iPhone based on whether it’s the golden age of smartphones. See where I’m going with this? Let’s break it down.

Technology Evolves, Always

AI, like any technology, doesn’t have an endpoint. It’s not a book where you finish Chapter 10 and suddenly, “Ta-da! It’s the final age!” Technology is an ongoing process, a constant loop of discovery, innovation, and adaptation.

Take the internet, for example. People called the late 1990s the “dot-com boom.” Now, a quarter of a century later, we’re living in an era of cloud computing, blockchain, and the metaverse. Did the internet stop evolving? Nope. AI is the same: it’ll keep shifting, advancing, and branching into new areas.

Instead of worrying whether we’re “too early” or “too late,” the question to ask is: What can I do with AI right now?

Focus on Application, Not the Timeline

When the printing press was invented, people didn’t sit around arguing about whether they were in the “Golden Age of Books.” They just started printing stuff. The same goes for AI. Whether it’s 1956 (the year the Dartmouth workshop launched AI as a field of research) or 2024, what matters is how we use it today.

Ask yourself: How can AI make my work smarter, faster, or more impactful? Whether it’s automating repetitive tasks, brainstorming creative ideas, or analyzing data, AI isn’t waiting for the perfect era to change the game. It’s already here, and it’s a tool you can use.

Hype Can Be Distracting

Let’s be honest: every tech trend comes with a dose of hype. Remember when 3D printing was supposed to change everything? Sure, it’s a great technology, but it didn’t exactly revolutionize how we eat breakfast.

AI’s hype cycle can make it tempting to get caught up in what’s next: AGI, quantum AI, AI consciousness. But the future of AI isn’t a race to some mythical “peak.” It’s a journey. And, spoiler alert: you’re already part of it.

The cool part? You don’t need to predict the future to make an impact. AI is already enabling better customer support, smarter health diagnostics, and even creative endeavors like writing this blog post (wink wink).

History Doesn’t Wait for Permission

If everyone waited for the “right time” to dive into AI, innovation would stagnate. The pioneers of AI weren’t sitting around wondering if they were early or late; they were too busy experimenting, failing, and learning.

The takeaway? Stop worrying about whether AI is too new, too old, or too hyped. Dive in. Experiment. Build. Because the best part about technology isn’t its age; it’s the opportunities it creates.

Wrapping Up

The age of AI doesn’t matter. What matters is how you choose to engage with it. You don’t need to be an AI researcher or a tech guru. You just need curiosity and a willingness to explore.

AI isn’t a trend; it’s a tool. It’s not about the “age” we’re in; it’s about what you do in it. So stop worrying about timelines and start asking yourself: How can I make AI work for me today?